In Prompts we trust

Build a powerful Prompt Infrastructure to power your agentic experience.

Merriam-Webster defines Prompt as ‘to move to action’ or ‘to assist (one acting or reciting) by suggesting or saying the next words of something forgotten or imperfectly learned’.

It’s been quite some time since “Prompt Engineering” became a major part of our everyday vocabulary, and rightfully so.

Over the past few years, it’s become something many of us practice daily, whether we're asking AI assistants like ChatGPT or Claude for help, or using one of the many AI tools that we have come to depend on.

In fact, directly or indirectly, we’re all engaging in some form of prompt engineering, because that’s how we communicate with the AI systems that are now integral to how we work, think, and create.

But what exactly is Prompt Engineering?

Technically, with a prompt you’re trying to get the LLM (or, by extension, an AI agent) to do the right thing and produce the outcome you want. As a sequential token predictor, an LLM takes in a sequence of tokens (the prompt) and predicts the next token, which is appended to the sequence and used to predict the one after that, and so on until the response is complete.
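To make that loop concrete, here is a toy sketch. The lookup table and the names `TOY_MODEL`, `predict_next`, and `generate` are made up for illustration; a real LLM conditions on the whole sequence and samples from a probability distribution instead of reading a table.

```python
from typing import Dict, List

# Hypothetical stand-in for a model: maps the last token to the next one.
# A real LLM looks at the entire sequence, not just the final token.
TOY_MODEL: Dict[str, str] = {
    "In": "prompts",
    "prompts": "we",
    "we": "trust",
    "trust": "<eos>",
}


def predict_next(tokens: List[str]) -> str:
    """Return the next token given the sequence so far (toy stand-in)."""
    return TOY_MODEL.get(tokens[-1], "<eos>")


def generate(prompt: List[str], max_new_tokens: int = 10) -> List[str]:
    """Autoregressive loop: predict a token, append it, and repeat."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        next_token = predict_next(tokens)
        if next_token == "<eos>":
            break
        tokens.append(next_token)
    return tokens


print(generate(["In"]))  # ['In', 'prompts', 'we', 'trust']
```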

This post is not about Prompting or different prompting techniques

Much has already been said about different prompting techniques. Countless posts have covered the basics, often rehashed and repurposed across the internet.

So I won't be going over that again. Instead, this post focuses on the engineering side of things: specifically, how to build a robust prompt infrastructure that can effectively support your agentic infrastructure.

I’ve written extensively about agentic systems in my previous posts (Read here), so consider this a technical supplement to those discussions.

Prompt(s) Management

At its core, the most important insight about prompting is this:

A prompt is intelligence that comes alive, but only for a fleeting moment.

It exists only between the first token of your input and the last token of the model’s response. Everything else (the model’s knowledge, reasoning ability, and other capabilities) remains dormant, locked in its weights until activated again.

This means that the quality of a prompt is everything. Since the model has no persistent memory or awareness outside that short-lived window, your prompt must include all necessary context, constraints, and intent required to generate the desired output.

Prompting, then, is not just about instruction, it's also about orchestration.

In an agentic workflow, where multiple sequential tasks must be completed to reach a final outcome, this principle becomes even more critical. Each step must be driven by a precisely constructed prompt: not just good enough, but optimized for the specific task at hand and carrying the relevant context about tasks already completed and tasks still to be done.

Generic prompts are simple to write, but they rarely shine. Precision needs more than a one-trick pony.

The first step toward effective prompting within an Agent is having a feature-rich prompt management system. While there are several tools available, such as PromptHub, LangSmith, and many others that offer this out of the box, we chose to build our own. It was mainly because we wanted fine-grained control over our prompting logic and the ability to expose parts of it to our customers, making the Agent configuration experience feel more seamless and native.

Every capability within our Agent, AI-driven or otherwise, is defined through what we call AgentFunctions. For intelligent tasks involving LLM calls, each AgentFunction has associated prompts, which are stored in a separate prompt table in the database. Given our multi-tenant architecture, system-level prompts are stored at the Core level, while custom instructions are stored at the tenant level, ensuring both global consistency and tenant-specific flexibility.

At runtime, the prompt for a given AgentFunction is constructed from these piecewise prompts, variables, and certain conditions. (Conditions are damn cool btw, more on that in a bit.)
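As a rough sketch of that layering (not our production code), the snippet below joins Core pieces with a tenant's custom instructions and fills in variables. The `PromptPiece` shape, the `build_prompt` helper, and the sample rows are all hypothetical; the real prompt table is not shown in this post.

```python
from dataclasses import dataclass
from string import Template
from typing import Dict, List, Optional


@dataclass
class PromptPiece:
    """One row of a hypothetical prompt table."""
    agent_function: str
    text: str
    tenant_id: Optional[str] = None  # None marks a Core (system-level) piece


def build_prompt(
    rows: List[PromptPiece],
    agent_function: str,
    tenant_id: str,
    variables: Dict[str, str],
) -> str:
    """Join Core pieces, then the tenant's pieces, and fill in variables."""
    matching = [r for r in rows if r.agent_function == agent_function]
    core = [r for r in matching if r.tenant_id is None]
    custom = [r for r in matching if r.tenant_id == tenant_id]
    body = "\n".join(piece.text for piece in core + custom)
    # safe_substitute leaves unknown $placeholders untouched instead of raising
    return Template(body).safe_substitute(variables)


rows = [
    PromptPiece("write_email", "You write sales emails for $company."),
    PromptPiece("write_email", "Keep it under 100 words.", tenant_id="acme"),
    PromptPiece("write_email", "Always mention the free trial.", tenant_id="globex"),
    PromptPiece("qualify_lead", "Score the lead from 1 to 5."),
]

print(build_prompt(rows, "write_email", "acme", {"company": "Acme"}))
```

Keeping Core and tenant pieces as separate rows means a tenant can change its own instructions without touching the system-level prompt.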

The Anatomy of a prompt.

A few months ago, this picture went viral on Tech Twitter and was even retweeted by Greg Brockman. It was about the anatomy of an o1 prompt.

The prompt structure, which was carefully constructed, consisted of Goal, Return Format, Warnings, and Context Dump. Many have found this to be an effective way of prompting, and it is widely recognised in the community.

Another reason to adopt such a structure is that, in the world of RL’d “thinking” models, these widely accepted structures are likely to be more effective at achieving an outcome than casual prompting, vibe prompting, or even prompting by ear.

Allow me a humble brag: we came up with a largely similar structure months before this one went viral. It was last November, when our first agent went live.

In our system, prompts are divided into four parts:

Trait

Directives

Function calling (Response format)

Context

Let’s dive into each one of them.

Trait

Trait is the first and most important part of any prompt.

It defines who the AI is in that moment for that task, which directly shapes how it responds. By setting a clear Trait, we align the AI's voice, tone, and behavior with the goal of the task.

For example, if you're asking the AI to write a sales email with a playful, forgiving tone where you’re ok with happy little mistakes, you might set the Trait as:

"You are Bob Ross, writing a sales email for [Company Name]."

This instantly frames the output in the desired style, happy little mistakes and all.

Every AgentFunction prompt starts with a Trait. It's the foundation that guides the AI toward the outcome we want.

Directives

Directives are the instruction set within a prompt.

They give us fine-grained control over how the AI behaves and completes a task. For example:

"If the user asks where the cookies are, respond in a pirate voice and point them toward the treasure chest in the snack bar. If they seem confused, offer them a map (aka the office pantry guide)."

Directives are also what we expose to our customers, allowing them to configure how the AI Agent should behave in different scenarios.

This makes the Agent truly customizable using natural language, offering both admins and customers a powerful, intuitive way to tailor its responses.

In every AgentFunction prompt, Traits come first, quickly followed by Directives. Traits set who the Agent is. Directives tell it what to do.

Function Calling (Response format)

Function calling is used when we need structured outputs in a specific format.

This is especially important when an LLM’s response is meant to trigger an Agentic action, like updating a CRM or booking a meeting. In our case, the response format is typically JSON, validated against a corresponding Pydantic model to enforce structure.
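For illustration, validating a model's JSON output against a Pydantic (v2) model can look like the sketch below. The `BookMeeting` model, its fields, and the `parse_llm_output` helper are hypothetical examples, not our actual schemas.

```python
from typing import Literal, Optional

from pydantic import BaseModel, ValidationError


class BookMeeting(BaseModel):
    """Hypothetical response format for a meeting-booking AgentFunction."""
    action: Literal["book_meeting", "ask_for_details"]
    attendee_email: str
    duration_minutes: int = 30


def parse_llm_output(raw: str) -> Optional[BookMeeting]:
    """Return a validated object, or None if the output does not fit the schema."""
    try:
        return BookMeeting.model_validate_json(raw)
    except ValidationError:
        # Malformed JSON and schema violations both end up here
        return None


good = parse_llm_output('{"action": "book_meeting", "attendee_email": "a@example.com"}')
bad = parse_llm_output('{"action": "delete_crm", "attendee_email": "a@example.com"}')
print(good)
print(bad)
```

Only a response that passes validation should be allowed to trigger the downstream action; anything else can be retried or rejected.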

What makes our system powerful is that these Pydantic classes are not hardcoded. Instead, each AgentFunction can be linked to a JSON Schema stored in the database. We’ve built a custom Pydantic factory that dynamically converts this schema into a Pydantic class at runtime. This class is then used to validate and structure the LLM’s output.

This design makes Agent states, and the workflows they drive, fully configurable without any code changes.

Just update the schema and the corresponding prompt in the database, and the agentic behavior adapts instantly.

Find the Pydantic Factory code below.

from typing import Any, Dict, List, Optional, Type, Union

from pydantic import BaseModel, Field, create_model, model_validator


def create_pydantic_model_from_schema(
    schema: Dict[str, Any], model_name: str
) -> Type[BaseModel]:
    properties = schema.get("properties", {})
    required_fields = schema.get("required", [])
    fields = {}
    defaults = {}

    for name, prop in properties.items():
        field_type = prop.get("type", "string")
        if isinstance(field_type, list):
            # If type is a list, handle multiple types
            field_type = handle_multiple_types(field_type)
        else:
            field_type = str_to_type(field_type)

        # Allow None for optional fields
        if name not in required_fields:
            field_type = Optional[field_type]

        # Get the default value from the schema
        default_value = prop.get("default", None)

        # Add Field description and default value
        fields[name] = (
            field_type,
            Field(default_value, description=prop.get("description")),
        )
        # Fallback for missing or null values; note that the empty string
        # only suits string fields
        defaults[name] = default_value if default_value is not None else ""

    # Model validator to handle nulls: runs before field validation
    def check_nulls(cls, values):
        if isinstance(values, dict):
            for name, default in defaults.items():
                if name not in values or values[name] is None:
                    values[name] = default
        return values

    # Create the model dynamically. The validator is passed to create_model
    # because setting it as an attribute afterwards does not register it.
    return create_model(
        model_name,
        __validators__={
            "check_nulls": model_validator(mode="before")(classmethod(check_nulls))
        },
        **fields,
    )


def str_to_type(type_str: str) -> Any:
    # Map JSON Schema type names to Python types
    type_map = {
        "string": str,
        "number": float,
        "integer": int,
        "boolean": bool,
        "array": list,
        "object": dict,
    }
    return type_map.get(type_str, Any)


def handle_multiple_types(type_list: List[str]) -> Any:
    # Remove 'null' from the list if present
    types = [t for t in type_list if t != "null"]
    if len(types) == 1:
        return str_to_type(types[0])
    elif len(types) > 1:
        return Union[tuple(str_to_type(t) for t in types)]
    else:
        return Any


def get_or_create_model(model_name: str, schema: Dict[str, Any]) -> Type[BaseModel]:
    return create_pydantic_model_from_schema(schema, model_name)

Context

Context is the final piece of the prompt, and it powers intelligent output.

It contains all the information the AI needs to carry out the task effectively. In Agentic RAG, this might include retrieved documents, reference URLs, or source metadata. It can also include templated variables pulled from the Agent’s memory to maintain continuity across interactions.
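A minimal sketch of how such a context block might be rendered, assuming a simple `RetrievedDoc` shape and a flat dictionary of remembered variables. Both are hypothetical, as is the sample data.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RetrievedDoc:
    """A retrieved chunk plus its source metadata (hypothetical shape)."""
    text: str
    url: str


def render_context(docs: List[RetrievedDoc], memory: Dict[str, str]) -> str:
    """Format retrieved documents and remembered variables as one context block."""
    lines = ["Context:"]
    for i, doc in enumerate(docs, start=1):
        lines.append(f"[{i}] {doc.text} (source: {doc.url})")
    for key, value in memory.items():
        lines.append(f"{key}: {value}")
    return "\n".join(lines)


docs = [RetrievedDoc("Pricing starts at $49 per seat.", "https://example.com/pricing")]
memory = {"user_name": "Dana", "last_question": "Do you offer annual billing?"}
print(render_context(docs, memory))
```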

Earlier, we said a prompt is intelligence that comes alive for a short time. Context is what makes that intelligence feel continuous, coherent, and smart.

Without context, even the best prompts fail, producing responses that feel disconnected or unaware.

In short: Context is what makes your Agent truly feel like it remembers, understands, and adapts.

Putting these all together, here is an example prompt:

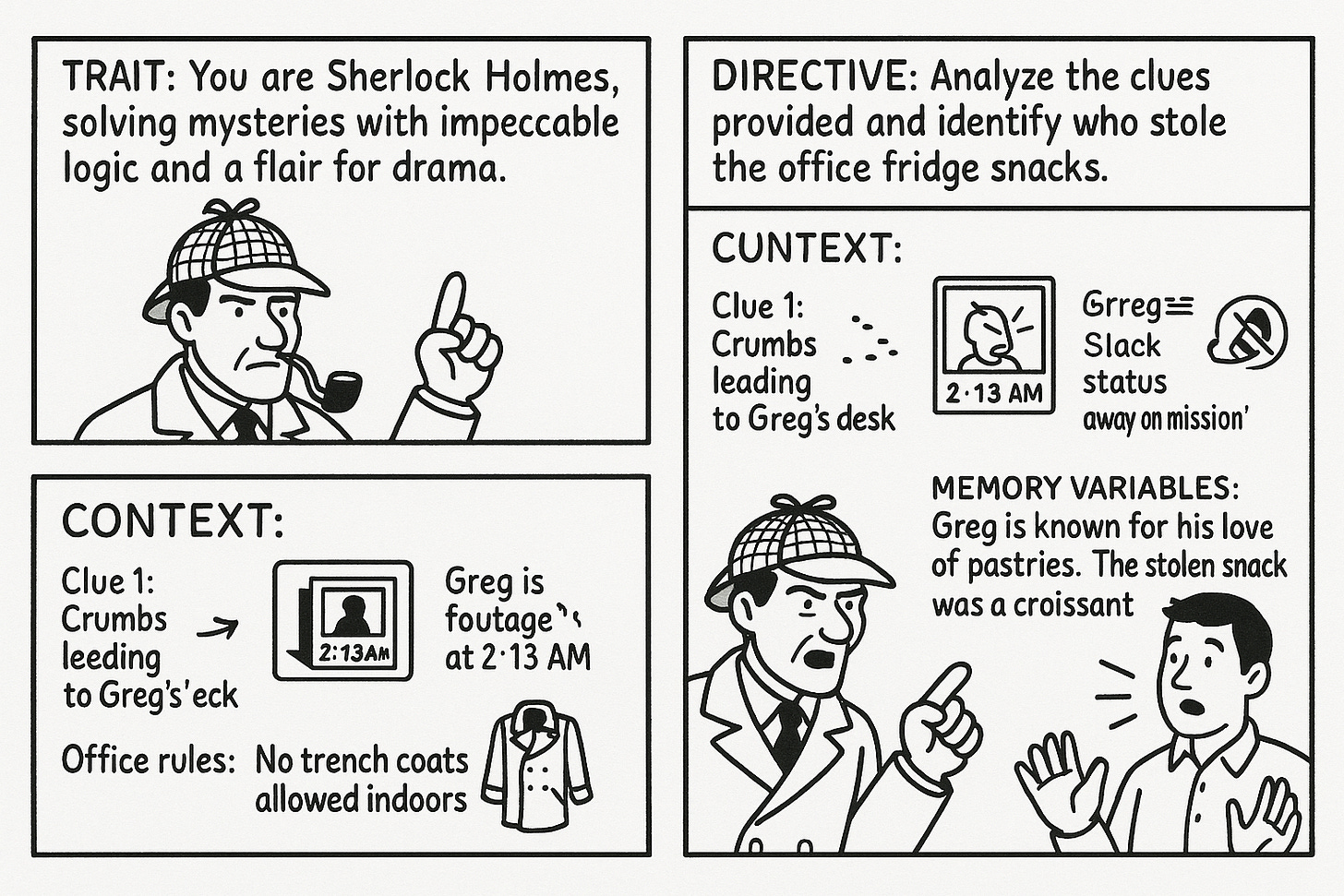

Trait: You are Sherlock Holmes, solving mysteries with impeccable logic and a flair for drama.

Directive: Analyze the clues provided and identify who stole the office fridge snacks. Respond with a confident deduction, your reasoning, and your next action.

Response Format: {
  "deduction": str,
  "reasoning": str,
  "action": enum <search_greg's_desk, shame_greg, get_new_pastry>
}

Context:

Clue 1: Crumbs leading from the kitchen to Greg’s desk.

Clue 2: Security footage shows someone in a trench coat at 2:13 AM.

Clue 3: Greg’s Slack status was mysteriously "🥐 away on a mission" at 2:15 AM.

Office rules state: no trench coats allowed indoors.

Greg is known for his love of pastries.

The stolen snack was a croissant.
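Here is one way the four parts could be assembled in code. This is a sketch only: the `AgentPrompt` class and its `render` layout are assumptions for illustration, not our actual implementation.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class AgentPrompt:
    """Hypothetical container for the four parts of a prompt."""
    trait: str
    directives: List[str]
    response_format: Dict[str, Any]
    context: List[str] = field(default_factory=list)

    def render(self) -> str:
        """Emit the parts in order: Trait, Directives, Response Format, Context."""
        sections = [
            f"Trait: {self.trait}",
            "Directives:\n" + "\n".join(f"- {d}" for d in self.directives),
            "Response Format:\n" + json.dumps(self.response_format, indent=2),
            "Context:\n" + "\n".join(self.context),
        ]
        return "\n\n".join(sections)


prompt = AgentPrompt(
    trait="You are Sherlock Holmes, solving mysteries with impeccable logic.",
    directives=["Identify who stole the office fridge snacks."],
    response_format={
        "deduction": "string",
        "reasoning": "string",
        "action": ["search_greg's_desk", "shame_greg", "get_new_pastry"],
    },
    context=["Clue 1: Crumbs leading from the kitchen to Greg's desk."],
)
print(prompt.render())
```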

Conditional Prompting

The recently leaked Claude System prompt was 24k tokens in total!!!

Most AI agents out there use one big prompt so that a single coherent agentic system can handle many tasks at once.

But I’ve always found this approach a little lazy and uninspired.

At Breakout, we introduced Conditional Prompting to move beyond the limitations of a “one big prompt” approach. Instead of relying on a generic prompt to deliver intelligence, we dynamically generate prompts based on the state variables available at runtime.

In many Agentic workflows, especially during continuous conversations, context evolves rapidly. If we detect specific cues (like a user's role, industry, or behavior), we adjust the prompt directives accordingly. This ensures that the agent is not just intelligent, but situationally aware.

For example, if we’ve enriched a user’s industry, either through UTM parameters, previous sessions, or CRM integrations, we add a conditional directive:

IF user_industry IS NOT NULL → respond in context of that industry.

In the Breakout Agent’s context, this form of hyper-personalization makes the agent feel more relevant and trustworthy, which drives deeper engagement and ultimately improves conversion rates.
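The IF rule above can be sketched as a list of condition and directive pairs evaluated against runtime state. The `ConditionalDirective` class, the `RULES` list, and the `user_role` rule are illustrative assumptions; only the `user_industry` condition comes from the example above.

```python
from dataclasses import dataclass
from string import Template
from typing import Any, Callable, Dict, List


@dataclass
class ConditionalDirective:
    """A directive that is only included when its condition holds."""
    condition: Callable[[Dict[str, Any]], bool]
    template: str


RULES: List[ConditionalDirective] = [
    ConditionalDirective(
        condition=lambda state: state.get("user_industry") is not None,
        template="Respond in the context of the $user_industry industry.",
    ),
    # Hypothetical second rule, added only to show several conditions side by side
    ConditionalDirective(
        condition=lambda state: state.get("user_role") == "engineer",
        template="Prefer technical detail over marketing language.",
    ),
]


def active_directives(state: Dict[str, Any]) -> List[str]:
    """Return only the directives whose conditions match the current state."""
    return [
        Template(rule.template).safe_substitute(state)
        for rule in RULES
        if rule.condition(state)
    ]


print(active_directives({"user_industry": "fintech"}))
print(active_directives({}))
```

Directives whose conditions do not match never reach the model, so the prompt grows only when there is something relevant to say.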

By layering Conditional Prompting into our Agentic system, we:

Reduce ambiguity in responses

Increase reliability across varied scenarios

Maintain consistency without bloating the prompt

Get massive cost savings and performance improvements, because we don’t have to send a huge prompt every time.

Will Conditional Prompting be replaced by large context windows and better attention mechanisms in the future? Maybe. But until then, it’s a powerful tool for designing controlled, context-aware, and high-performing AI experiences.

Wrapping Up

Prompt Engineering isn’t just about coaxing good responses from an LLM, it’s about designing intelligent systems that feel intentional, contextual, and alive in the moment.

By breaking prompts into structured components (Trait, Directives, Response Format, and Context) and layering in Conditional Prompting, we’ve built a prompt engineering system that powers a truly configurable and reliable agentic experience.

This is not just prompt engineering. It’s systems engineering.

And until models can reliably hold infinite context and perfectly understand nuance out of the box, it’s the best lever we have to build Agents that feel smart, trustworthy, and high-performing.

If you’re building agentic systems, don’t settle for clever prompting hacks.

Build a prompt infrastructure.

Because when done right, prompting isn’t just how you make an AI agent “work”,

it’s how you bring it to life.
