The Age of Agents

The Autonomous Era is already here.

“The best way to predict the future is to invent it.” - Alan Kay

From Asimov’s I, Robot to The Electric State, from Metropolis’s Maria to HAL 9000, from JARVIS to Her, some of our best stories have dreamt aloud about machines that could think, feel, and act with purpose.

Automatons, androids, agents. They’ve all flickered across our screens as both warnings and promises. But until two or three years ago, they felt like nothing more than a promise of the future.

But that’s no longer the case. The Autonomous Era is finally here. And it’s here to stay.

Over the last few years, the future of large‑language‑model (LLM) applications has been invented in real time, and it is moving so fast that it has become extremely hard to keep up.

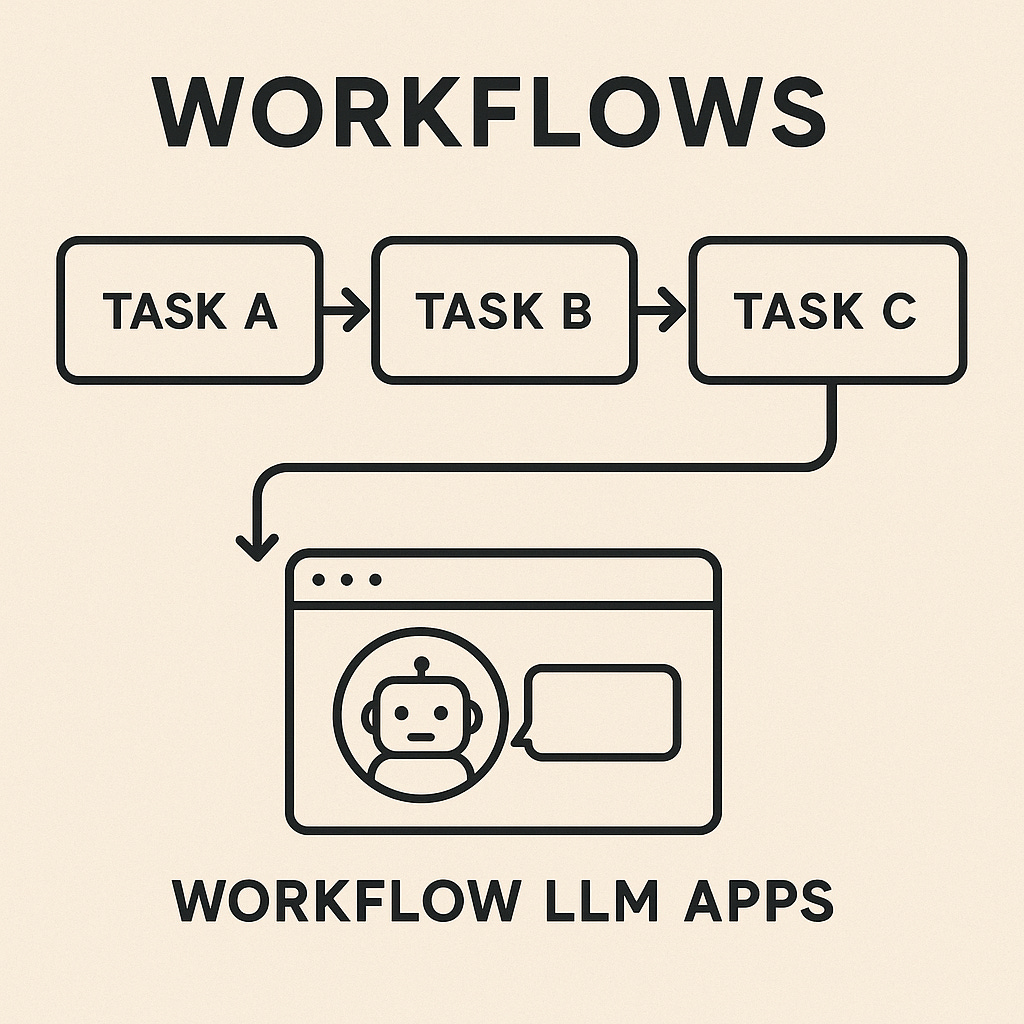

2023 was the era of workflows. We built multistep, hand‑crafted LLM pipelines, which brought a flavour of intelligence to our traditionally deterministic software.

But that was just the beginning.

By mid‑2024 we discovered ReAct‑style agents that could reason and decide which tool to call next. The first time I witnessed a ReAct agent, I was truly mesmerised. This was a step change in how jobs get done. It was a glimpse of how the entire software industry could be overhauled to deliver service-like outcomes, without the massive ops overhead of course.

Quiet, containerised agents sitting on some server in a cold, dark datacenter, quietly doing their jobs. “The Ideal Worker”. (Sounds a little dystopian, but these are machines :P)

But just six months later, in 2025, we’re witnessing a quieter but more profound shift: Planning Agent architectures that decompose, delegate and adapt the way an experienced project manager would.

Below is a practical tour of that evolution, plus copy‑and‑pasteable code you can steal for your own stack. (All code is merely representative. I am working on open-sourcing a comprehensive framework for agent creation, but that’s for another time 🙂)

Boring old workflows FTW

I’ve written extensively about workflow-based agents, and I still believe they will be the backbone of much of the more deterministic, performance-based software for the foreseeable future. You can read more about them in my previous posts.

Linking the first part here:

To recap, Workflow LLM apps chained calls in a fixed order. You’d wire together “summarise → extract → generate” and hope for an outcome that best fits your needs.

    class WorkflowEngine:
        def __init__(self, llm_client):
            self.llm = llm_client
            self.steps = []  # ordered list of step objects, run first to last

        async def execute(self, input_data):
            # Shared context: each step reads from it and writes its output back
            ctx = {"input": input_data}
            for step in self.steps:
                resp = await self.llm.generate(
                    messages=[
                        {"role": "system", "content": step.system_prompt},
                        {"role": "user", "content": step.format_input(ctx)},
                    ]
                )
                ctx[step.output_key] = resp.content
            # Assumes the last step stores its output under "final_output"
            return ctx["final_output"]
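
The engine above never says what a `step` looks like. Here is a minimal sketch of one possibility, run offline against a stub. `Step` and `FakeLLM` are hypothetical names invented for this illustration, and the engine is repeated in compact form so the snippet runs on its own.

```python
import asyncio
from dataclasses import dataclass
from types import SimpleNamespace


@dataclass
class Step:
    """One link in the chain (hypothetical helper, not from any library)."""
    system_prompt: str
    input_key: str   # which context entry to feed to the model
    output_key: str  # where to store the model's reply

    def format_input(self, ctx):
        return str(ctx[self.input_key])


class FakeLLM:
    """Stand-in client: wraps the input in the prompt name so the order is visible."""
    async def generate(self, messages):
        system, user = messages[0]["content"], messages[1]["content"]
        return SimpleNamespace(content=f"{system}({user})")


class WorkflowEngine:
    def __init__(self, llm_client):
        self.llm = llm_client
        self.steps = []

    async def execute(self, input_data):
        ctx = {"input": input_data}
        for step in self.steps:
            resp = await self.llm.generate(messages=[
                {"role": "system", "content": step.system_prompt},
                {"role": "user", "content": step.format_input(ctx)},
            ])
            ctx[step.output_key] = resp.content
        return ctx["final_output"]


engine = WorkflowEngine(FakeLLM())
# summarise -> extract -> generate, always in this order
engine.steps = [
    Step("summarise", "input", "summary"),
    Step("extract", "summary", "facts"),
    Step("generate", "facts", "final_output"),  # last step must write final_output
]
result = asyncio.run(engine.execute("raw text"))
print(result)
```

The nesting in the printed string shows the fixed order of the chain. It also shows the rigidity: if a step has to be skipped or repeated, someone has to edit the list by hand.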

LLM workflows are predictable, more performant and more reliable for production use cases. But they have some major disadvantages too: they are inflexible and, much like traditional software, they don’t handle edge cases on their own.

✅ Predictable · ❌ Inflexible · ❌ Breaks on edge cases

Autonomous Agents: The ReAct Breakthrough

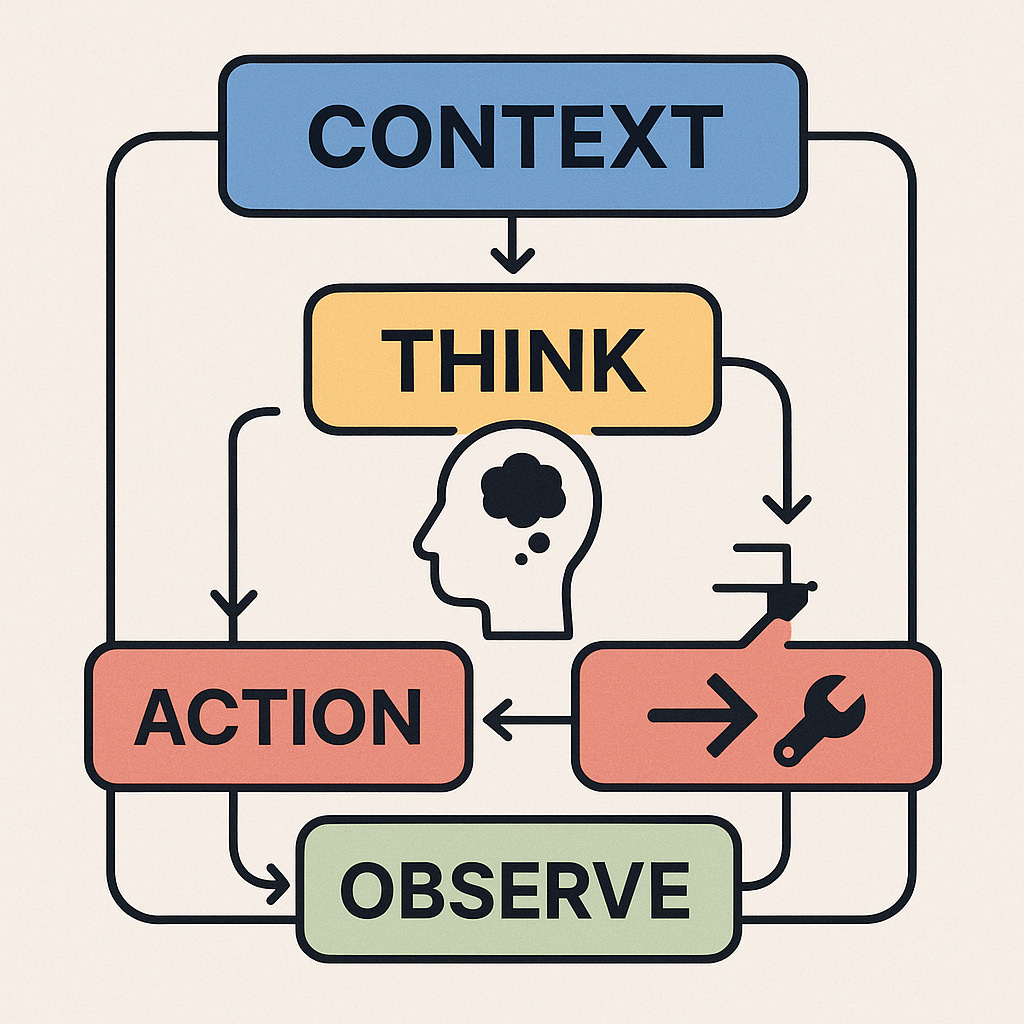

ReAct (“Reason + Act”) lets models think step‑by‑step, decide which tool to use, observe the result, then think again. When it first came out, it felt like magic, and it quickly became the backbone of projects like AutoGPT and BabyAGI.

Paper: ReAct: Synergizing Reasoning and Acting in Language Models

The basic structure of a ReAct agent is quite simple. It takes a context (a query or problem) and runs a Think, Act, Observe loop until it achieves the desired outcome.

First, it takes the problem and forces a thought on it, given the context acquired so far. The initial context contains the tools available.

Then it identifies the next action based on the tools available. Once the tool is identified, we use the function-calling paradigm to construct the arguments and call that tool (function).

Observe forces thinking again, with the outcome of the action as additional context.

This loop runs until we arrive at a result.

    from typing import Literal, Optional

    from pydantic import BaseModel


    class AgentAction(BaseModel):
        type: Literal["think", "use_tool", "result"]
        content: Optional[str] = None
        tool_name: Optional[str] = None
        parameters: Optional[dict] = None


    class ReactAgent:
        def __init__(self, llm_client, tools):
            self.llm = llm_client
            self.tools = tools
            self.memory = []

        async def solve(self, goal):
            self.memory.append({"role": "user", "content": goal})
            # No step limit: the loop only ends when the model emits "result"
            while True:
                action = await self.llm.generate_structured(
                    messages=self.memory,
                    response_model=AgentAction,  # think / use_tool / result
                )
                if action.type == "think":
                    self.memory.append({"role": "assistant", "content": action.content})
                elif action.type == "use_tool":
                    outcome = await self.tools.execute(action.tool_name, action.parameters)
                    self.memory.append({"role": "tool", "content": str(outcome)})
                elif action.type == "result":
                    return action.content
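
To watch the Think, Act, Observe loop run end to end without an API key, here is a small sketch that swaps the model for a scripted stub. `ScriptedLLM`, `ToolRegistry` and the `add` tool are hypothetical names made up for this illustration. The loop mirrors the agent above but uses a plain dataclass instead of Pydantic, so it runs on the standard library alone.

```python
import asyncio
from dataclasses import dataclass
from typing import Optional


@dataclass
class AgentAction:
    type: str  # "think" | "use_tool" | "result"
    content: Optional[str] = None
    tool_name: Optional[str] = None
    parameters: Optional[dict] = None


class ToolRegistry:
    """Maps tool names to plain Python callables (hypothetical helper)."""
    def __init__(self, **tools):
        self._tools = tools

    async def execute(self, name, parameters):
        return self._tools[name](**(parameters or {}))


class ScriptedLLM:
    """Replays a fixed list of actions instead of calling a real model."""
    def __init__(self, script):
        self._script = iter(script)

    async def generate_structured(self, messages, response_model):
        return next(self._script)


async def solve(llm, tools, goal):
    memory = [{"role": "user", "content": goal}]
    while True:
        action = await llm.generate_structured(
            messages=memory, response_model=AgentAction
        )
        if action.type == "think":
            memory.append({"role": "assistant", "content": action.content})
        elif action.type == "use_tool":
            outcome = await tools.execute(action.tool_name, action.parameters)
            memory.append({"role": "tool", "content": str(outcome)})
        elif action.type == "result":
            return action.content, memory


script = [
    AgentAction("think", content="I need to add the two numbers."),
    AgentAction("use_tool", tool_name="add", parameters={"a": 2, "b": 3}),
    AgentAction("result", content="2 + 3 = 5"),
]
tools = ToolRegistry(add=lambda a, b: a + b)
answer, trace = asyncio.run(solve(ScriptedLLM(script), tools, "What is 2 + 3?"))
for message in trace:
    print(message["role"], "->", message["content"])
```

The trace holds one entry per loop turn: the user goal, the thought, and the tool observation. A real model would pick the actions itself, and the stub only makes the control flow visible.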

ReAct agents gave us the first taste of what autonomous agents were capable of. Now, with the advent of thinking models, this architecture can handle more complex tasks on its own, making it more flexible and task-aware. But it also has a problem: it can fall into endless loops with no end in sight.

A silicon worker, trapped in the sands of time forever.

✅ Adaptive · ✅ Tool‑aware · ❌ Can get lost in endless reasoning
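
One blunt guard against that endless loop is a step budget. The sketch below is a minimal illustration, assuming a hypothetical `max_steps` parameter and `StepBudgetExceeded` exception, neither of which exists in the agent above. Tool handling is left out to keep the focus on the bound itself.

```python
import asyncio
from dataclasses import dataclass
from typing import Optional


class StepBudgetExceeded(RuntimeError):
    """Raised when the agent uses up its budget without producing a result."""


@dataclass
class AgentAction:
    type: str  # "think" | "result"
    content: Optional[str] = None


class OverthinkingLLM:
    """Stub model that never stops thinking; simulates the endless loop."""
    async def generate_structured(self, messages, response_model):
        return AgentAction("think", content="Let me reconsider...")


async def solve_bounded(llm, goal, max_steps=8):
    memory = [{"role": "user", "content": goal}]
    # A for loop replaces `while True`, so the agent cannot run forever
    for _ in range(max_steps):
        action = await llm.generate_structured(
            messages=memory, response_model=AgentAction
        )
        if action.type == "result":
            return action.content
        memory.append({"role": "assistant", "content": action.content})
    raise StepBudgetExceeded(f"no result after {max_steps} steps")


try:
    asyncio.run(solve_bounded(OverthinkingLLM(), "Tidy the slide deck", max_steps=5))
    outcome = "finished"
except StepBudgetExceeded as exc:
    outcome = str(exc)
print(outcome)
```

A budget stops the loop, but it does not make the agent any smarter about where to spend its steps.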

The Planning Solution

So how do we solve this endless loop problem?

Turns out... we need Managers after all.

Just like in the real world, if you leave a worker alone too long, they’ll either go rogue or spend hours perfecting a slide deck no one asked for. So we add structure.

Every product team has backlogs, epics, and todos to tame complexity.

Planning Agents borrow that same discipline. They don’t just think - they plan, prioritize, and move on.

    from typing import List, Optional

    from pydantic import BaseModel


    class TodoItem(BaseModel):
        content: str
        result: Optional[str] = None


    # Pydantic v1 custom root type; Pydantic v2 replaces __root__ with RootModel
    class TodoList(BaseModel):
        __root__: List[TodoItem]

        def __iter__(self):
            return iter(self.__root__)

        def __getitem__(self, item):
            return self.__root__[item]

        def __len__(self):
            return len(self.__root__)


    class PlanningAgent:
        def __init__(self, llm, tools):
            self.llm = llm
            self.tools = tools
            self.todos = []

        # --- Phase 1: Plan ---
        async def create_plan(self, problem):
            prompt = f"""Create the minimal TODO list to solve: {problem}
    - If a single step suffices, output ONE todo
    - Break down only genuinely complex problems"""
            self.todos = await self.llm.generate_structured(
                messages=[{"role": "user", "content": prompt}],
                response_model=TodoList,
            )

        # --- Phase 2: Execute ---
        async def execute_plan(self):
            for todo in self.todos:
                # ReactAgent is the class from the previous section; a fresh
                # instance per todo means each one starts with empty memory
                agent = ReactAgent(self.llm, self.tools)
                todo.result = await agent.solve(todo.content)

        # --- Phase 3: Synthesize ---
        async def summarise(self):
            answers = "\n".join(t.result for t in self.todos if t.result)
            resp = await self.llm.generate(
                messages=[{"role": "user", "content": f"Synthesise:\n{answers}"}]
            )
            return resp.content

Why it wins

Strategise – Analyse the goal, decide if and how to break it up.

Delegate – Spawn fresh ReAct sub‑agents, each with isolated memory and a 5–10‑step budget.

Monitor & Adapt – If a sub‑task fails, create a recovery todo.

Synthesize – Compress all partial results into one coherent deliverable.
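
The “Monitor & Adapt” step is the one the class above does not show. Below is a minimal sketch of the idea, assuming a hypothetical `Todo` dataclass and a stub sub-agent that fails on purpose. In a real planner the recovery todo would more likely be written by the LLM than by a string template.

```python
import asyncio
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Todo:
    content: str
    result: Optional[str] = None
    is_recovery: bool = False


async def flaky_subagent(task):
    """Stub sub-agent: pretends the search API is down."""
    if task.startswith("Query the search API"):
        raise RuntimeError("search API timed out")
    return f"done: {task}"


async def execute_plan(todos, run_subagent):
    queue, finished = deque(todos), []
    while queue:
        todo = queue.popleft()
        try:
            todo.result = await run_subagent(todo.content)
        except Exception as exc:
            todo.result = f"failed: {exc}"
            # Recover once only: a failed recovery todo is not retried,
            # otherwise the planner could loop forever as well
            if not todo.is_recovery:
                queue.append(Todo(
                    content=f"Recover from failure ({exc}) while doing: {todo.content}",
                    is_recovery=True,
                ))
        finished.append(todo)
    return finished


plan = [Todo("Query the search API for pricing"), Todo("Draft the reply")]
finished = asyncio.run(execute_plan(plan, flaky_subagent))
for todo in finished:
    print(todo.is_recovery, "|", todo.result)
```

The failed todo stays in the record with its error, the rest of the plan still runs, and the recovery todo is picked up at the end of the queue.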

Some Results in the Wild

Coding agents finish 20–40% faster on open‑source benchmarks when upgraded from ReAct to Planning Agents.

RAG pipelines (retrieval‑augmented generation) avoid hallucinations by treating “find missing citation” as a discrete todo.

Product‑support bots self‑heal: when a tool API fails, they queue a “fallback‑search” todo instead of crashing.

Planning beats prompting. Once you teach your LLM to ask, “What’s the minimal todo list?” you unlock a flywheel of autonomy, reliability and scale.

Migrating Your ReAct Agents

Wrap ReAct in a Todo Loop – Keep your existing ReAct code and just add a lightweight planner on top.

Bound sub‑agent steps – 5–10 reasoning loops is usually enough.

Persist state – Store todo status so the agent can resume after a crash.

Measure – Track success‑rate‑per‑todo and average steps‑per‑todo. These metrics surface hidden bottlenecks.

Iterate – Use failure cases to refine your planning prompt (just like you refine user stories in Agile).
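
The “Persist state” and “Measure” points can be sketched with the standard library alone. This is a rough illustration, not a prescribed schema: `TodoRecord`, its `status` and `steps` fields and the JSON file layout are all assumptions made for this example.

```python
import json
import tempfile
from dataclasses import asdict, dataclass
from pathlib import Path
from typing import List, Optional


@dataclass
class TodoRecord:
    content: str
    status: str = "pending"  # "pending" | "done" | "failed"
    steps: int = 0           # reasoning loops the sub-agent used
    result: Optional[str] = None


def save_todos(path: Path, todos: List[TodoRecord]) -> None:
    path.write_text(json.dumps([asdict(t) for t in todos], indent=2))


def load_todos(path: Path) -> List[TodoRecord]:
    return [TodoRecord(**row) for row in json.loads(path.read_text())]


def metrics(todos: List[TodoRecord]) -> dict:
    # Only todos that were actually attempted count towards the metrics
    attempted = [t for t in todos if t.status != "pending"]
    if not attempted:
        return {"success_rate": 0.0, "avg_steps": 0.0}
    return {
        "success_rate": sum(t.status == "done" for t in attempted) / len(attempted),
        "avg_steps": sum(t.steps for t in attempted) / len(attempted),
    }


todos = [
    TodoRecord("Fetch the changelog", status="done", steps=3, result="ok"),
    TodoRecord("Summarise breaking changes", status="failed", steps=10),
    TodoRecord("Draft the release note"),
]
with tempfile.TemporaryDirectory() as tmp:
    state_file = Path(tmp) / "todos.json"
    save_todos(state_file, todos)       # write after every todo in practice
    restored = load_todos(state_file)   # what a restarted agent would see

remaining = [t.content for t in restored if t.status == "pending"]
stats = metrics(restored)
print(remaining, stats)
```

After a crash, the agent reloads the file and resumes with whatever is still pending. A todo that keeps hitting its step budget shows up as a high `avg_steps` with a low `success_rate`, which is usually a hint that the planning prompt needs to split it further.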

What Next?

Meta‑planning – Agents that optimise their own planning heuristics.

Org charts – Networks of specialised agents mirroring real‑world teams.

Continuous learning – Plans evolve as the environment shifts (A/B tests, KPI feedback, etc.).

We’re moving from “ask the model for an answer” to “hire the model as a team lead”.

The line between software and organisation design is blurring fast.

The Future Is Agentic

The most advanced systems today don’t just respond, they reason. They plan, adapt, delegate, and self-correct.

We’re no longer designing functions. We’re designing organizations of machines. Teams of collaborative, reasoning agents.

The Age of Agents isn’t just coming. It’s already here.

Have questions or want a review? Leave a comment or send me a message 🙂