The Automation Fallacy

Why your AI Agents will fail.

The biggest promise of AI Agents is that they’ll reliably perform tasks on our behalf. In theory, they could step in as a drop-in replacement for anyone handling that job and keep things running without intervention, except for the occasional policy-mandated check.

That vision is exciting, but in reality, we’re still a ways off from completely autonomous agents.

Right now, what works best is a Human-in-the-Loop (HITL) approach. These systems don’t replace people entirely; they automate large, repetitive chunks of work so the human can step in only where it matters most. This doesn’t just make life easier for individuals, it also unlocks a lot of value for teams by helping them stay leaner while still growing faster.

The Automation Fallacy

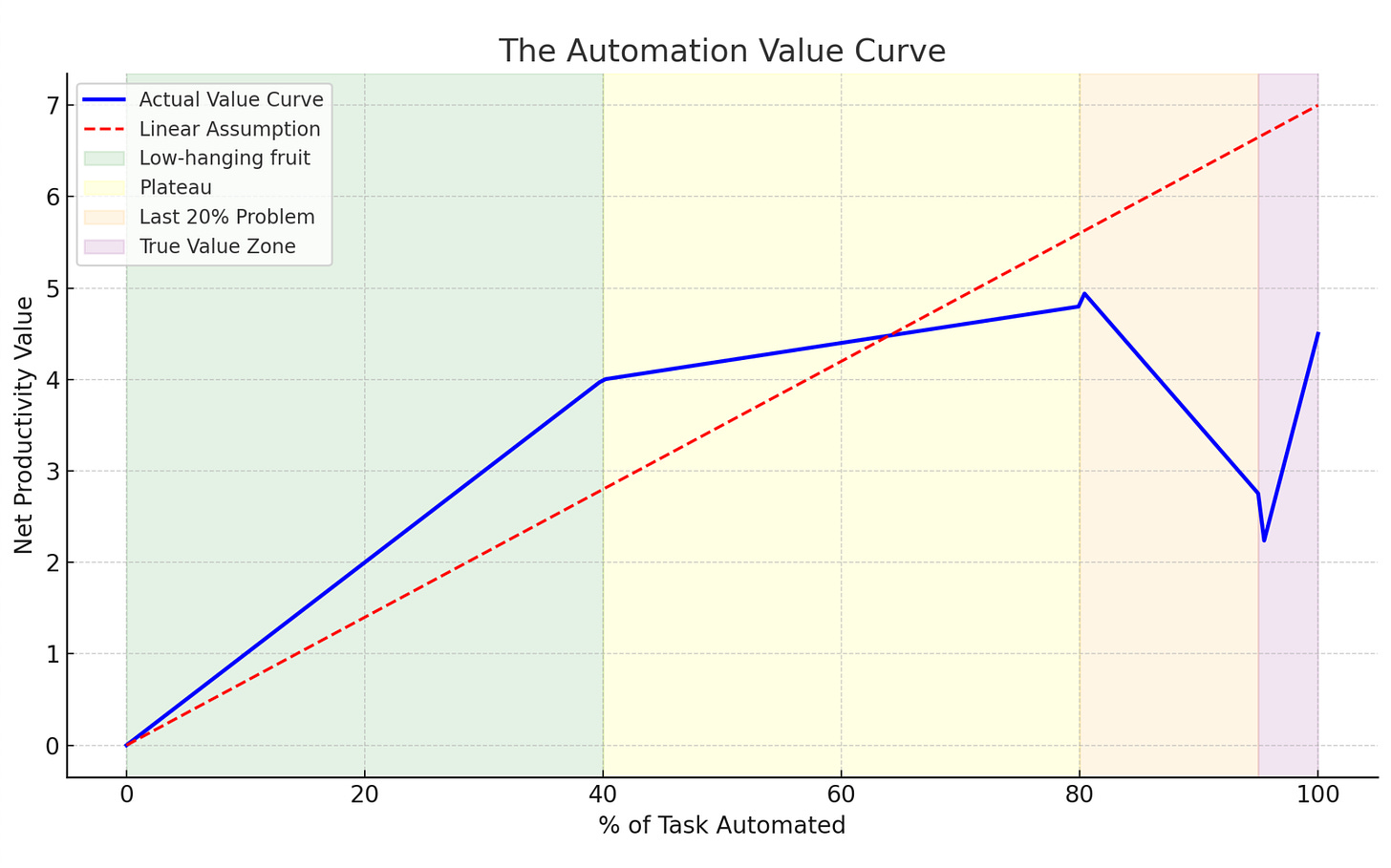

It’s tempting to think the math is straightforward. If an AI automates 50% of a task, the human becomes 2x more productive. If it automates 80%, suddenly they’re 5x more productive. Logical, right?

Unfortunately, that assumption is completely wrong.

Most tasks just aren’t linear in nature. Automation almost always starts by tackling the low-hanging fruit: the repetitive, structured steps that are easiest to codify. As the system improves, you might reach 80% coverage and feel confident you’ve unlocked huge value.

But the catch is that the last 20% is the hardest part, and it gets even harder because of context loss.

When a person has to step in at that point, they’re not continuing smoothly from where the AI left off. Instead, they face four compounding problems:

Lost context – they don’t know how or why the agent made certain decisions.

Extra effort – they may need to validate or even redo what the agent did before moving forward.

Broken flow – fixing up the agent’s output often takes longer than just doing the whole job from scratch.

Rigid flow – the agent’s process or agentic workflow is often too fixed. It doesn’t adapt or learn from how the human finishes the task, so the automation stays stuck at 80% instead of flexibly moving toward 90% and beyond.

So that final 20% can sometimes take more time than it would have to complete the entire task manually. Instead of making people 5x more productive, partial automation risks slowing them down.
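To make the arithmetic concrete, here is a minimal back-of-the-envelope sketch. It is a toy model, not a measurement: `context_recovery` and `review_fraction` are hypothetical parameters introduced for illustration, and every cost is expressed as a fraction of the time the full manual task would take.

```python
def naive_speedup(automated_fraction: float) -> float:
    """Speedup under the linear assumption: the human only does what is left."""
    if not 0.0 <= automated_fraction < 1.0:
        raise ValueError("automated_fraction must be in [0, 1)")
    return 1.0 / (1.0 - automated_fraction)


def effective_speedup(
    automated_fraction: float,
    context_recovery: float = 0.0,
    review_fraction: float = 0.0,
) -> float:
    """Speedup once handoff costs are counted (illustrative model only).

    context_recovery: time spent working out what the agent did and why.
    review_fraction:  share of the agent's output the human re-validates
                      or redoes before moving forward.
    """
    if not 0.0 <= automated_fraction < 1.0:
        raise ValueError("automated_fraction must be in [0, 1)")
    remaining_work = 1.0 - automated_fraction
    review_work = review_fraction * automated_fraction
    human_time = remaining_work + review_work + context_recovery
    return 1.0 / human_time


print(f"{naive_speedup(0.5):.1f}x")  # 2.0x
print(f"{naive_speedup(0.8):.1f}x")  # 5.0x

# Assumed costs: the human re-checks 75% of the agent's work and spends
# the equivalent of 30% of the manual task rebuilding context.
print(f"{effective_speedup(0.8, context_recovery=0.3, review_fraction=0.75):.2f}x")  # 0.91x
```

With those assumed costs, 80% coverage lands slightly below 1x, meaning the person is slower than if they had done everything by hand. The exact numbers are made up; the point is that the speedup depends on the handoff costs as much as on the coverage percentage.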

This is also why AI agents often fail during pilots, despite their fantastic capabilities and mind-blowing demos.

To give an example, a coding agent might generate 80% of your code. It can one-shot entire classes, data models, migrations, everything. That’s impressive and saves time upfront. But if the structure isn’t quite right with respect to your design principles, or if the logic doesn’t fully match the business rules, the developer now has to dive in and untangle someone else’s half-finished work.

And if you’ve worked with developers, you know that there’s nothing they hate more than cleaning up someone else’s half-baked code, except for maybe meetings 😜

This isn’t unique to coding, though. It’s the same dynamic in other domains like accounting, report generation, customer support, or data analysis. Automating the “easy” parts feels like progress in theory, but without proper context handoff, it just doesn’t make much difference to the end user.

Where Partial Automation Still Works

This doesn’t mean partial automation has no value. It just means it must be designed thoughtfully. Two principles matter most:

Seamless handoff: The AI needs to pass context in a way that makes it easy for a human to pick up where it left off. Summaries, structured outputs, or clear audit trails can eliminate the need for reverse engineering.

End-to-end sub-tasks: Instead of doing 80% of every job, agents should fully own specific, repeatable sub-jobs. Do end-to-end account mapping. Create draft entries. Triage and route the customer ticket. The human then steps in only when judgment or nuance is required.
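As a rough illustration of the first principle, a handoff can be a small structured record rather than a pile of raw output. The sketch below is one possible shape, not a standard: the `Decision` and `Handoff` classes, their fields, and the 0.8 review threshold are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    """One choice the agent made, with the reasoning a reviewer would need."""
    step: str
    choice: str
    reason: str
    confidence: float  # 0.0 to 1.0, self-reported by the agent (assumption)


@dataclass
class Handoff:
    """What the agent passes to the human when it stops."""
    task_id: str
    summary: str
    decisions: list[Decision] = field(default_factory=list)
    open_questions: list[str] = field(default_factory=list)

    def needs_review(self, threshold: float = 0.8) -> list[Decision]:
        """Decisions the human should look at first."""
        return [d for d in self.decisions if d.confidence < threshold]

    def render(self, threshold: float = 0.8) -> str:
        """Plain-text audit trail a person can scan in a few seconds."""
        lines = [f"Task {self.task_id}: {self.summary}", "Decisions:"]
        for d in self.decisions:
            flag = "REVIEW" if d.confidence < threshold else "ok"
            lines.append(f"  [{flag}] {d.step}: {d.choice} ({d.reason})")
        if self.open_questions:
            lines.append("Open questions:")
            lines.extend(f"  - {q}" for q in self.open_questions)
        return "\n".join(lines)


# Made-up accounting example.
handoff = Handoff(
    task_id="INV-001",
    summary="Drafted journal entries for 2 invoices",
    decisions=[
        Decision("Map vendor 'Acme Cloud'", "Software Subscriptions",
                 "matched a prior entry", 0.95),
        Decision("Map vendor 'J. Smith'", "Contractor Fees",
                 "no prior entry, guessed from memo", 0.55),
    ],
    open_questions=["Is 'J. Smith' a contractor or an employee reimbursement?"],
)
print(handoff.render())
```

The human reads the summary, jumps straight to the flagged decision, and never has to reverse engineer why the agent mapped anything the way it did.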

This shift in design turns HITL into an amplifier rather than a bottleneck.

But the real silver bullet is Agentic Memory.

The real unlock for AI agents won’t just come from better models or faster automation. It will come from adaptiveness. The biggest frustration with today’s partial automation is that it’s rigid. The agent makes its best guess, the human cleans it up, and the agent learns nothing from that cleanup. Next time, the same gaps appear, and the cycle repeats.

What’s missing is agentic memory, a policy layer that allows agents to watch, record, and internalize how humans finish the last mile. Instead of getting stuck at 80% coverage, the system uses every correction as fuel to stretch toward 90%, even 95%.

This creates two powerful effects:

Compounding automation – over time, the agent doesn’t just do more of the work, it does more of the right work, tailored to the team’s rules and preferences.

Stickiness – because the agent adapts to the unique workflows of its users, switching away from it feels costly. It doesn’t just automate tasks; it remembers how you do them.

In other words, the way past the Last 20% Problem isn’t just brute-force improvements. It’s giving agents memory and adaptiveness, so they become collaborative partners that grow more capable the longer they’re in the loop.
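To show the feedback loop in its simplest possible form, here is a toy sketch of a correction memory. It is deliberately naive: it only remembers exact-match corrections, whereas a real memory layer would need to generalize across similar cases. `CorrectionMemory`, `map_account`, and the account names are hypothetical and exist only for this example.

```python
from collections import Counter, defaultdict
from typing import Optional


class CorrectionMemory:
    """Remembers how humans fixed the agent's output, keyed by task and input."""

    def __init__(self) -> None:
        self._corrections: dict[tuple[str, str], Counter] = defaultdict(Counter)

    def record(self, task_type: str, key: str,
               agent_output: str, human_output: str) -> None:
        """Store the human's final answer whenever it differs from the agent's."""
        if agent_output != human_output:
            self._corrections[(task_type, key)][human_output] += 1

    def recall(self, task_type: str, key: str) -> Optional[str]:
        """Return the most frequent past correction, or None if there is none."""
        seen = self._corrections.get((task_type, key))
        if not seen:
            return None
        return seen.most_common(1)[0][0]


def map_account(vendor: str, memory: CorrectionMemory) -> str:
    """Prefer what the team did last time over a fresh guess."""
    remembered = memory.recall("account_mapping", vendor)
    if remembered is not None:
        return remembered
    return "Uncategorized"  # stand-in for the agent's best guess


memory = CorrectionMemory()

first_try = map_account("Acme Cloud", memory)
# The human fixes the mapping, and the fix is recorded.
memory.record("account_mapping", "Acme Cloud", first_try, "Software Subscriptions")

second_try = map_account("Acme Cloud", memory)
print(first_try, "->", second_try)
```

The second run returns the human's correction instead of repeating the original guess, which is the whole idea in miniature: every cleanup becomes input for the next attempt.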

The true promise of AI Agents isn’t about nibbling away at tasks in percentages.

It’s about taking full ownership of well-bounded, repeatable workstreams so that humans can focus on the parts of the job that require context, creativity, and/or decision-making.

Instead of thinking, “We’ve automated 80% of this task, so we’ve made people 5x more productive,” the better framing is: “We’ve automated this entire sub-task end-to-end, so humans never have to think about it again.”

Humans and agents should each fully own meaningful slices of work, end-to-end. Like parts of a well-oiled machine, their work should be complementary, not tripping each other up.
